This blog post is cross-posted on arnaudpain.com and tech-addict.fr, as we (Arnaud Pain and Samuel Legrand) have decided to work together to present this topic at multiple events in 2019.

We have decided to work on this presentation to help users understand how they can rely on Microsoft for their data protection.

Below you will find more information on the implementation, along with our feedback.

What is Storage Replica

Storage Replica is a Windows Server technology that enables replication of volumes between servers or clusters for disaster recovery. It also enables you to create stretch failover clusters that span two sites, with all nodes staying in sync.

Supported configurations

Stretch Cluster allows configuration of computers and storage in a single cluster, where some nodes share one set of asymmetric storage and some nodes share another, then synchronously or asynchronously replicate with site awareness. This scenario can utilize Storage Spaces with shared SAS storage, SAN and iSCSI-attached LUNs. It is managed with PowerShell and the Failover Cluster Manager graphical tool, and allows for automated workload failover.

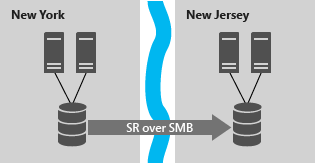

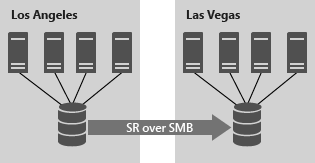

Cluster to Cluster allows replication between two separate clusters, where one cluster synchronously or asynchronously replicates with another cluster. This scenario can utilize Storage Spaces Direct, Storage Spaces with shared SAS storage, SAN and iSCSI-attached LUNs. It is managed with Windows Admin Center and PowerShell, and requires manual intervention for failover.

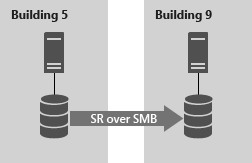

Server to Server allows synchronous and asynchronous replication between two standalone servers, using Storage Spaces with shared SAS storage, SAN and iSCSI-attached LUNs, and local drives. It is managed with Windows Admin Center and PowerShell, and requires manual intervention for failover.
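To compare the three scenarios at a glance, here is a small Python sketch that encodes the properties listed above (supported storage, management tools, automated failover) as data and filters them. The storage labels are our own shorthand, and the `matching` helper is purely illustrative, not part of any Microsoft tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    storage: frozenset          # storage types the scenario can use
    management: tuple           # tools used to manage it
    automatic_failover: bool    # True only for Stretch Cluster


SCENARIOS = [
    Scenario(
        "Stretch Cluster",
        frozenset({"Storage Spaces with shared SAS", "SAN", "iSCSI-attached LUNs"}),
        ("PowerShell", "Failover Cluster Manager"),
        True,
    ),
    Scenario(
        "Cluster to Cluster",
        frozenset({"Storage Spaces Direct", "Storage Spaces with shared SAS", "SAN", "iSCSI-attached LUNs"}),
        ("Windows Admin Center", "PowerShell"),
        False,
    ),
    Scenario(
        "Server to Server",
        frozenset({"Storage Spaces with shared SAS", "SAN", "iSCSI-attached LUNs", "local drives"}),
        ("Windows Admin Center", "PowerShell"),
        False,
    ),
]


def matching(storage: str, need_automatic_failover: bool = False) -> list:
    """Names of the scenarios that accept `storage` (and fail over automatically, if required)."""
    return [
        s.name
        for s in SCENARIOS
        if storage in s.storage and (s.automatic_failover or not need_automatic_failover)
    ]


if __name__ == "__main__":
    print(matching("Storage Spaces Direct"))              # ['Cluster to Cluster']
    print(matching("SAN", need_automatic_failover=True))  # ['Stretch Cluster']
```

This is why our lab, built on Storage Spaces Direct, ends up in the Cluster to Cluster scenario.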

The Lab

For this article, we decided to work with a Cluster to Cluster configuration:

- 2 datacenters: 1 in the USA and 1 in France

- VPN connection between the two datacenters using pfSense

- 1 host running VMware 6.7 U1 in each datacenter

- 1 AD server + 2 SSD (Storage Spaces Direct) servers in each datacenter

Storage Spaces Direct Installation

First of all, you need to decide whether you want to use a File Share Witness or a Cloud Witness for the cluster quorum.

Because we decided to use a Cloud Witness (backed by an Azure storage account), there are some prerequisites on the SSD servers. Run the following commands in an elevated PowerShell session on each node:

Install the NuGet provider: `Find-Module -Repository PSGallery -Verbose -Name NuGet` (if the NuGet provider is missing, PowerShellGet prompts you to install it)

Install the Azure PowerShell module: `Install-Module Az`

You will then need to restart each node.

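Here are the PowerShell commands to run:

```powershell
# Replace server-1 and server-2 with the names of your cluster nodes
$nodes = ("server-1", "server-2")

# Install the Failover Clustering and File Server features on all nodes
icm $nodes {Install-WindowsFeature Failover-Clustering -IncludeAllSubFeature -IncludeManagementTools}

icm $nodes {Install-WindowsFeature FS-FileServer}
```

Restart each node, then run:

```powershell
# Validate the nodes before creating the cluster
Test-Cluster -Node $nodes

# Create the cluster without shared storage (Cluster-Name and Cluster-IP are placeholders)
New-Cluster -Name Cluster-Name -Node $nodes -NoStorage -StaticAddress Cluster-IP

Connect-AzAccount

# Configure the Cloud Witness with the storage account name and one of its access keys
Set-ClusterQuorum -CloudWitness -AccountName Cloud-Witness-Account -AccessKey Key-1

# Enable Storage Spaces Direct, skipping the disk eligibility checks (as in our virtualized lab)
Enable-ClusterS2D -SkipEligibilityChecks

# Data volume (CSV) and log volume (plain ReFS, not a CSV)
New-Volume -StoragePoolFriendlyName S2D* -FriendlyName US-Storage -FileSystem CSVFS_REFS -Size 30GB

New-Volume -StoragePoolFriendlyName S2D* -FriendlyName US-Storage-Logs -FileSystem ReFS -Size 5GB

Get-ClusterSharedVolume
```

Restart each node.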

SOFS Role Configuration

Next, we install the Scale-Out File Server (SOFS) role, which requires some preparation first: you need to ensure that the cluster computer object has permission to create computer objects in the OU where it resides.

Here are the steps to install the SOFS role.

Once the SOFS role is installed, the next step is to create the file share. Depending on your configuration, you may need to wait for replication to occur before continuing.

Here are the steps to add the file share.

Storage Replica installation and configuration

Before starting the Storage Replica steps, you need to have created the other cluster.

In our example, all the above steps also need to be done on Samuel's infrastructure in France.

Here are the PowerShell commands to run:

```powershell
$nodes = ("server-1", "server-2")

# Install Storage Replica on all nodes, restarting them if required
icm $nodes {Install-WindowsFeature Storage-Replica -IncludeManagementTools -Restart}
```

In an elevated Command Prompt, run the following command on each node:

```cmd
netsh advfirewall firewall add rule name=PROBEPORT dir=in protocol=tcp action=allow localport=59999 remoteip=any profile=any
```

Then run the following PowerShell commands on one node:

```powershell
# Adjust these values to your environment
$ClusterNetworkName = "Cluster Network 1"
$IPResourceName = "Cluster IP Address"
$ILBIP = "10.0.0.153"
[int]$ProbePort = 59999

# Configure the cluster IP address resource with the probe port
Get-ClusterResource $IPResourceName | Set-ClusterParameter -Multiple @{
    "Address"               = "$ILBIP"
    "ProbePort"             = $ProbePort
    "SubnetMask"            = "255.255.255.255"
    "Network"               = "$ClusterNetworkName"
    "ProbeFailureThreshold" = 5
    "EnableDhcp"            = 0
}
```

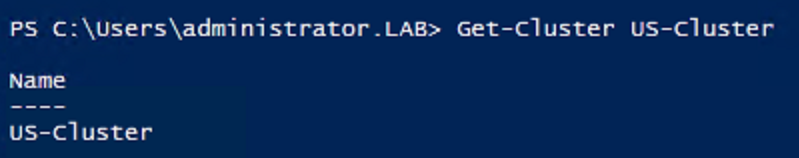

Once this has been done on both clusters, make sure that the two clusters can communicate with each other:

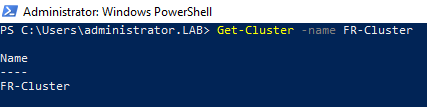

```powershell
# Run from fr-server-1
Get-Cluster -Name US-Cluster

# Run from us-server-1
Get-Cluster -Name FR-Cluster
```

On the US SSD server:

In the Failover Cluster Manager console, make sure that US-Storage and US-Storage-Logs are owned by the local server, and that US-Storage-Logs is not assigned to Cluster Shared Volumes.

In the Computer Management console, assign drive letter L: to US-Storage-Logs.

Note: Make sure a C:\Temp folder exists on both the source and destination computers (create it if needed), as it is used for the test results.

Run the following PowerShell command:

```powershell
# Validate the proposed replication for 5 minutes; the report is written to C:\Temp
Test-SRTopology -SourceComputerName US-Cluster -SourceVolumeName C:\ClusterStorage\US-Storage\ -SourceLogVolumeName L: -DestinationComputerName FR-Cluster -DestinationVolumeName C:\ClusterStorage\FR-Storage\ -DestinationLogVolumeName L: -DurationInMinutes 5 -ResultPath C:\Temp
```

On the FR SSD server:

In the Failover Cluster Manager console, make sure that FR-Storage and FR-Storage-Logs are owned by the local server, and that FR-Storage-Logs is not assigned to Cluster Shared Volumes.

In the Computer Management console, assign drive letter L: to FR-Storage-Logs.

Run the following PowerShell command:

```powershell
Test-SRTopology -SourceComputerName FR-Cluster -SourceVolumeName C:\ClusterStorage\FR-Storage\ -SourceLogVolumeName L: -DestinationComputerName US-Cluster -DestinationVolumeName C:\ClusterStorage\US-Storage\ -DestinationLogVolumeName L: -DurationInMinutes 5 -ResultPath C:\Temp
```

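On the US SSD server:

```powershell
# Grant FR-Cluster access to replicate with the US cluster
Grant-SRAccess -ComputerName us-ssd-01 -Cluster FR-Cluster
```

On the FR SSD server:

```powershell
# Grant US-Cluster access to replicate with the FR cluster
Grant-SRAccess -ComputerName fr-ssd-01 -Cluster US-Cluster
```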

On the US SSD server:

```powershell
New-SRPartnership -SourceComputerName US-Cluster -SourceRGName US -SourceVolumeName C:\ClusterStorage\US-Storage\ -SourceLogVolumeName L: -DestinationComputerName FR-Cluster -DestinationRGName FR -DestinationVolumeName C:\ClusterStorage\FR-Storage\ -DestinationLogVolumeName L: -LogSizeInBytes 4GB
```

Note: Because our log volume (5GB) is smaller than the minimum recommended size of 8GB, we need to specify the -LogSizeInBytes parameter.
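PowerShell size literals such as 4GB are binary multiples (1GB = 1,073,741,824 bytes), so it is easy to check what -LogSizeInBytes actually receives and whether it fits on the log volume. The Python sketch below does that arithmetic for our lab values; it ignores file-system metadata, so the usable space on a 5GB ReFS volume is in practice slightly lower.

```python
# PowerShell numeric suffixes (KB, MB, GB, TB) are powers of 1024.
GIB = 1024 ** 3


def ps_gb(n: float) -> int:
    """Byte value PowerShell produces for a literal such as 4GB."""
    return int(n * GIB)


RECOMMENDED_LOG_BYTES = ps_gb(8)  # 8GB minimum recommendation quoted in this post
log_volume_bytes = ps_gb(5)       # *-Storage-Logs volumes were created with -Size 5GB
requested_log_bytes = ps_gb(4)    # value passed to -LogSizeInBytes

print(requested_log_bytes)                       # 4294967296
print(RECOMMENDED_LOG_BYTES > log_volume_bytes)  # True: an 8GB log would not fit
print(requested_log_bytes <= log_volume_bytes)   # True: 4GB fits (metadata ignored)
```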

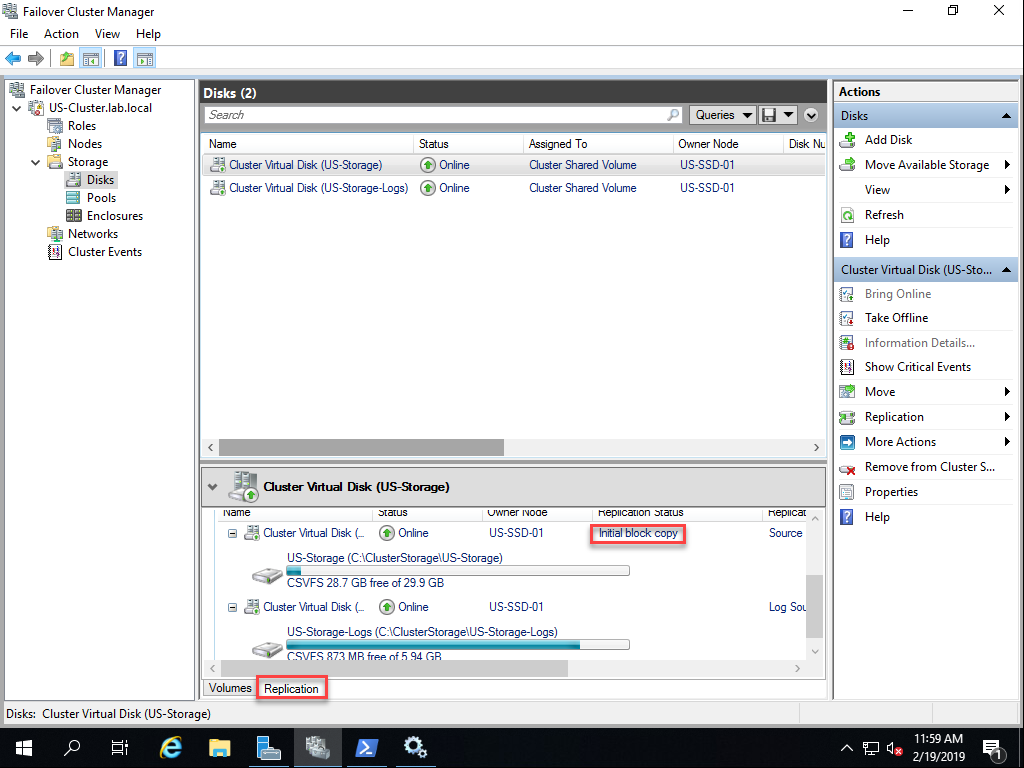

During the initial synchronization, the replication status is Initial block copy
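Because the whole volume is copied during the initial block copy, its duration is driven mainly by the WAN bandwidth. The Python sketch below is a back-of-the-envelope estimate that counts only the data volume and assumes a hypothetical 100 Mbit/s VPN link of which about 80% is usable; both figures are assumptions, not measurements from our lab.

```python
def initial_copy_hours(volume_gib: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Rough duration, in hours, of an initial block copy of a whole data volume.

    volume_gib: size of the replicated data volume in GiB (the full size is copied)
    link_mbps:  WAN bandwidth in megabits per second
    efficiency: assumed usable fraction of the link (VPN/IPsec and protocol overhead)
    """
    bits_to_copy = volume_gib * 1024 ** 3 * 8
    usable_bits_per_second = link_mbps * 1_000_000 * efficiency
    return bits_to_copy / usable_bits_per_second / 3600


# Our 30GB data volume over the hypothetical 100 Mbit/s link
print(round(initial_copy_hours(30, 100), 2))  # 0.89
```

Doubling the bandwidth halves the estimate, while the volume size grows it linearly, which is why large volumes deserve a planning check before the partnership is created.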

Change Replication Mode

Because our two sites, in the US and France, are separated by medium-to-high latency, we decided to switch the replication mode from synchronous to asynchronous.

To do so, we ran the following PowerShell command:

```powershell
Set-SRPartnership -ReplicationMode Asynchronous -SourceComputerName US-Cluster -SourceRGName US -DestinationComputerName FR-Cluster -DestinationRGName FR
```

To validate the change, run `Get-SRGroup` and check its ReplicationMode property.

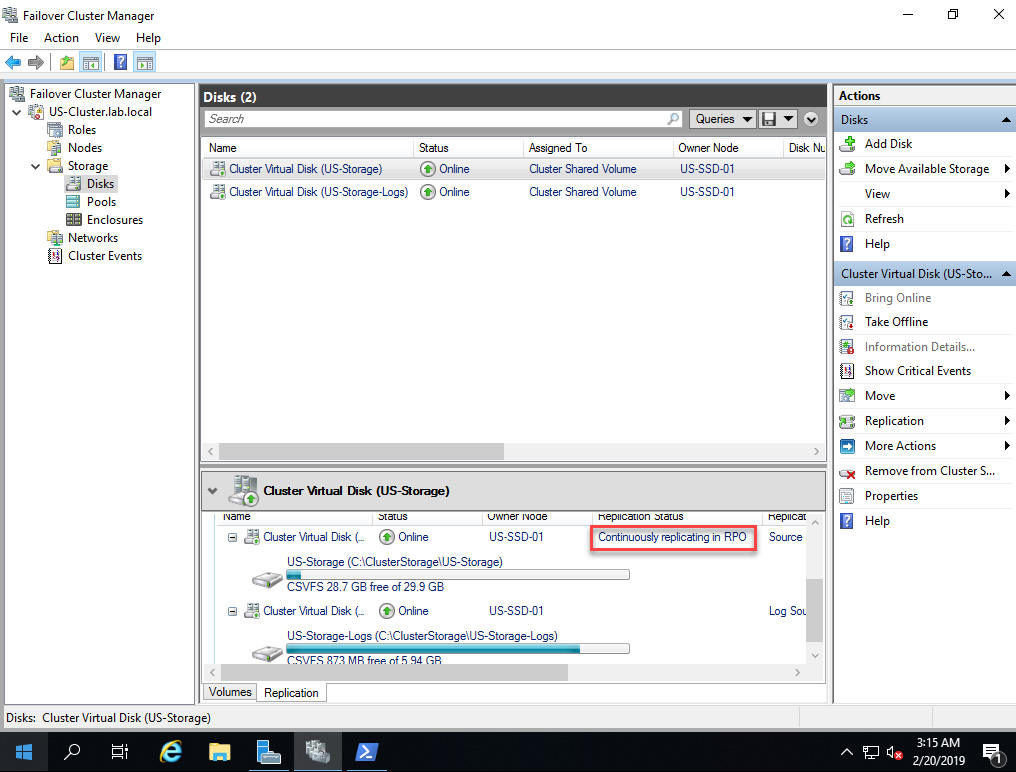

Now that replication is enabled, Failover Cluster Manager shows which volumes are replication sources and which are destinations. A new Replication tab is added, where you can check the replication status. The destination volume stays inaccessible until you reverse the replication direction.

The first status will be Initial block copy.

Validation of replication

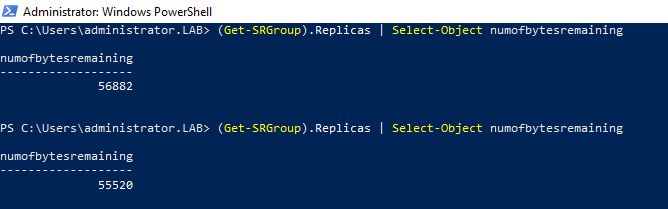

You can check the progress of the replication with the following command, which returns the number of bytes that remain to be replicated:

```powershell
(Get-SRGroup).Replicas | Select-Object NumOfBytesRemaining
```

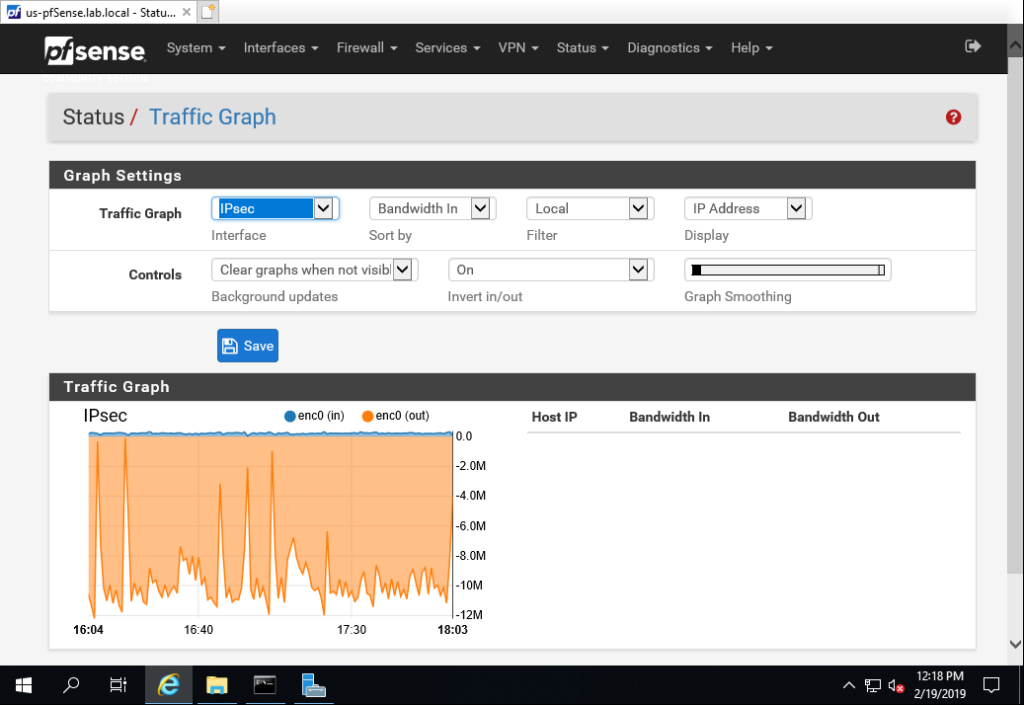

We can also see the traffic between the firewalls on the IPsec interface.

Once the initial synchronization is finished, the replication status is Continuously replicating.
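Two readings of NumOfBytesRemaining taken a few minutes apart are enough to estimate how long the initial block copy still needs. The Python sketch below assumes a constant copy rate between the readings; the readings themselves are hypothetical, not values from our lab.

```python
from datetime import datetime, timedelta


def estimate_remaining(earlier, later):
    """Estimate the time left in the initial block copy.

    Each argument is a (timestamp, bytes_remaining) pair, e.g. a reading of
    NumOfBytesRemaining. Assumes the copy rate observed between the two
    readings stays constant; returns None if no progress was observed.
    """
    (t1, remaining1), (t2, remaining2) = earlier, later
    elapsed = (t2 - t1).total_seconds()
    copied = remaining1 - remaining2
    if elapsed <= 0 or copied <= 0:
        return None
    # remaining / rate, written as remaining * elapsed / copied
    return timedelta(seconds=remaining2 * elapsed / copied)


GIB = 1024 ** 3
# Hypothetical readings taken 10 minutes apart on a 30GB volume
first = (datetime(2019, 1, 1, 10, 0), 30 * GIB)
second = (datetime(2019, 1, 1, 10, 10), 24 * GIB)
print(estimate_remaining(first, second))  # 0:40:00
```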

Test Storage Replica

Now that everything is in place, we need to ensure that it’s working as expected.

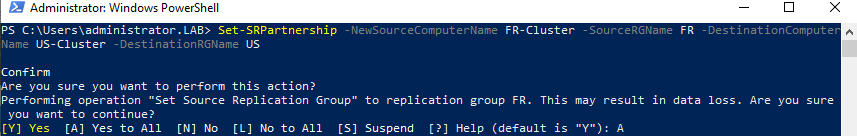

We need to reverse the replication direction so that FR becomes the source and its volume is mounted.

In the US, we have the following:

Run the following PowerShell command:

```powershell
# Make FR-Cluster the new source; US-Cluster becomes the destination
Set-SRPartnership -NewSourceComputerName FR-Cluster -SourceRGName FR -DestinationComputerName US-Cluster -DestinationRGName US
```

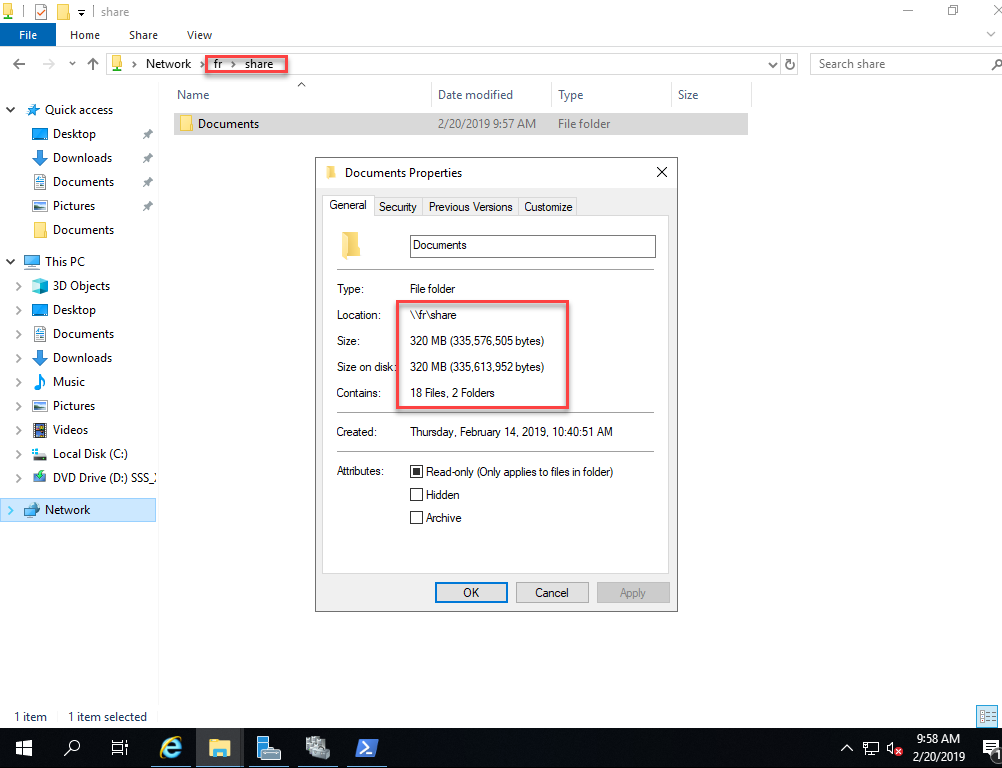

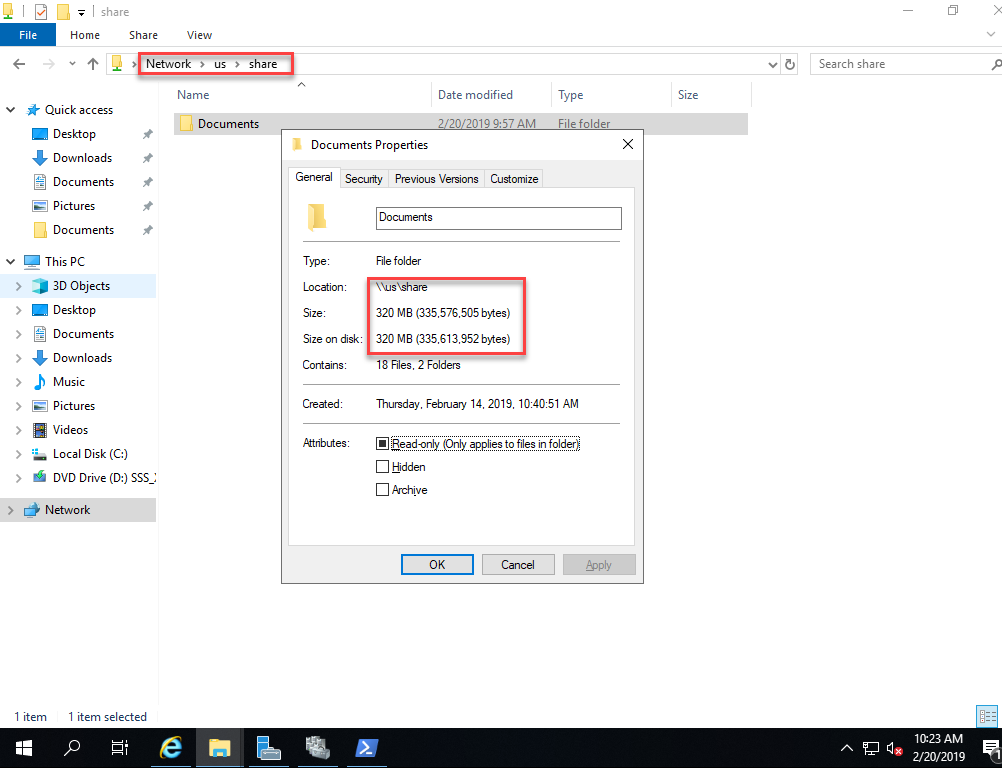

We can now see the files in FR and check that their number and size are consistent with what we had before switching replication.

Because we are simulating an outage during which data keeps being written, we added some data to the folder and waited for replication to occur before switching back to the US.

We can see below that we then had 3 files totaling 83MB.

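Switch replication back to the US

```powershell
# Make US-Cluster the source again; FR-Cluster goes back to being the destination
Set-SRPartnership -NewSourceComputerName US-Cluster -SourceRGName US -DestinationComputerName FR-Cluster -DestinationRGName FR
```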
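The failover and failback we just performed can be summarized as a toy state model in Python: only the source side of the partnership is mounted, and reversing the direction swaps the roles. The `Partnership` class is hypothetical; it only mirrors the Windows Server 2016 behavior described in this post and does not call Storage Replica.

```python
class Partnership:
    """Toy model of a Storage Replica partnership between two clusters.

    Only the current source is mounted; the destination stays dismounted
    until the replication direction is reversed.
    """

    def __init__(self, source: str, destination: str):
        self.source, self.destination = source, destination

    def accessible(self, cluster: str) -> bool:
        return cluster == self.source

    def reverse(self, new_source: str) -> None:
        # In this model, the new source must be the current destination,
        # as with the -NewSourceComputerName values used in this post.
        if new_source != self.destination:
            raise ValueError(f"{new_source} is not the current destination")
        self.source, self.destination = self.destination, self.source


p = Partnership("US-Cluster", "FR-Cluster")
print(p.accessible("FR-Cluster"))  # False
p.reverse("FR-Cluster")            # fail over to France
print(p.accessible("FR-Cluster"))  # True
p.reverse("US-Cluster")            # switch replication back to the US
print(p.source)                    # US-Cluster
```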

Validation

Notes from the Field

The log disk should have a minimum recommended size of 8GB (although you can test with a smaller one, as we did).

During the initial replication, the full size of the volume is copied from the source to the destination. In our example, with a 30GB data volume plus logs, we observed the following:

Replication mode selection:

Storage Replica supports synchronous and asynchronous replication:

- Synchronous replication mirrors data within a low-latency network site with crash-consistent volumes to ensure zero data loss at the file-system level during a failure.

- Asynchronous replication mirrors data across sites beyond metropolitan ranges over network links with higher latencies, but without a guarantee that both sites have identical copies of the data at the time of a failure.
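Why the distance between our sites pushed us toward asynchronous mode can be shown with a deliberately simplified latency model in Python. The timings are hypothetical, and the model assumes that in synchronous mode the local log write overlaps with the network transfer to the destination.

```python
def write_ack_ms(mode: str, local_log_ms: float, rtt_ms: float, remote_log_ms: float) -> float:
    """Simplified latency until an application write is acknowledged.

    synchronous:  acknowledged only after the write is in the local log and the
                  destination has written it to its log and answered (one round trip).
    asynchronous: acknowledged once the write is in the local log; it is sent
                  to the destination afterwards.
    """
    if mode == "synchronous":
        # Assumption: local log write and network transfer run in parallel,
        # so the slower of the two paths sets the latency.
        return max(local_log_ms, rtt_ms + remote_log_ms)
    if mode == "asynchronous":
        return local_log_ms
    raise ValueError(f"unknown replication mode: {mode}")


# Hypothetical numbers: 0.5 ms log writes, 1 ms metro RTT vs 100 ms transatlantic RTT
print(write_ack_ms("synchronous", 0.5, 1, 0.5))     # 1.5
print(write_ack_ms("synchronous", 0.5, 100, 0.5))   # 100.5
print(write_ack_ms("asynchronous", 0.5, 100, 0.5))  # 0.5
```

In this model every synchronous write pays the full round trip, so a long-distance link directly inflates application write latency, whereas asynchronous mode keeps it local at the cost of the data-loss guarantee.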

Windows Server 2016 and later include an option for a Cloud (Azure)-based Witness. You can choose this quorum option instead of the file share witness.

Key evaluation points and behaviors

- Network bandwidth and latency with fastest storage. There are physical limitations around synchronous replication. Because Storage Replica implements an IO filtering mechanism using logs and requiring network round trips, synchronous replication is likely to make application writes slower. By using low-latency, high-bandwidth networks as well as high-throughput disk subsystems for the logs, you minimize performance overhead.

- The destination volume is not accessible while replicating in Windows Server 2016. When you configure replication, the destination volume dismounts, making it inaccessible to any reads or writes by users. Its drive letter may be visible in typical interfaces like File Explorer, but an application cannot access the volume itself. Block-level replication technologies are incompatible with allowing access to the destination target’s mounted file system in a volume; NTFS and ReFS do not support users writing data to the volume while blocks change underneath them.

- The Microsoft implementation of asynchronous replication is different from most. Most industry implementations of asynchronous replication rely on snapshot-based replication, where periodic differential transfers move to the other node and merge. Storage Replica asynchronous replication operates just like synchronous replication, except that it removes the requirement for a serialized synchronous acknowledgment from the destination. This means that Storage Replica theoretically has a lower RPO as it continuously replicates. However, this also means it relies on internal application consistency guarantees rather than using snapshots to force consistency in application files. Storage Replica guarantees crash consistency in all replication modes.

- Many customers use DFS Replication as a disaster recovery solution even though it is often impractical for that scenario: DFS Replication cannot replicate open files and is designed to minimize bandwidth usage at the expense of performance, leading to large recovery point deltas. Storage Replica may allow you to retire DFS Replication from some of these types of disaster recovery duties.

- Storage Replica is not backup. Some IT environments deploy replication systems as backup solutions, due to their zero data loss options when compared to daily backups. Storage Replica replicates all changes to all blocks of data on the volume, regardless of the change type. If a user deletes all data from a volume, Storage Replica replicates the deletion instantly to the other volume, irrevocably removing the data from both servers. Do not use Storage Replica as a replacement for a point-in-time backup solution.

- Storage Replica is not Hyper-V Replica or Microsoft SQL AlwaysOn Availability Groups. Storage Replica is a general purpose, storage-agnostic engine. By definition, it cannot tailor its behavior as ideally as application-level replication. This may lead to specific feature gaps that encourage you to deploy or remain on specific application replication technologies.
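The point that Storage Replica is not backup can be illustrated with a toy Python model of block-level replication: every change, including a deletion, reaches the destination, while only a separate point-in-time copy preserves the old data. The `ReplicatedVolume` class is hypothetical and ignores file-system details (a real deletion changes metadata blocks rather than removing blocks).

```python
class ReplicatedVolume:
    """Toy block-level replica: each block change on the source is applied to the destination."""

    def __init__(self):
        self.source = {}       # block number -> contents
        self.destination = {}

    def write(self, block: int, data: bytes) -> None:
        self.source[block] = data
        self.destination[block] = data     # replicated whatever the change means

    def delete(self, block: int) -> None:
        # Simplification: a deletion is modeled as the block disappearing.
        self.source.pop(block, None)
        self.destination.pop(block, None)  # the deletion is replicated too


vol = ReplicatedVolume()
vol.write(0, b"report.docx")
vol.write(1, b"budget.xlsx")
backup = dict(vol.source)  # point-in-time copy taken before the mistake

vol.delete(0)
vol.delete(1)
print(vol.destination)     # {}
print(sorted(backup))      # [0, 1]
```

The replica faithfully mirrors the mistake; only the independent point-in-time copy can bring the data back.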